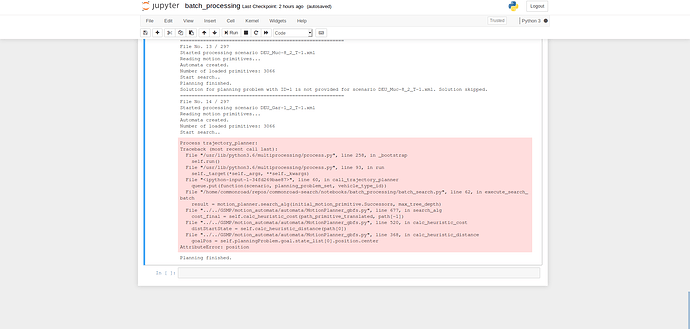

Hi, I am getting an error when I just run the default script. It happens mostly on scenario 14, and then the script never moves ahead. Also, nothing happens at all when I change the planner_id from gbfs to A*.

Hi,

The previous version of batch upload did not handle cases that raise errors. Please check out the latest version of commonroad-search and let me know if you still face the issue. Changing the planner ID should also work now (you can set it in the configuration file).

That said, the newer version only prevents the code from terminating due to errors. In your case, you tried to access the position attribute of the first state in the goal's state list; however, in this scenario the goal state does not have a ‘position’ attribute, so the planner throws an error. A solution is to first check whether the goal state has a specific attribute using the hasattr() function.
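A minimal sketch of the hasattr() check described above. The goal states here are stand-in objects built with SimpleNamespace, not CommonRoad classes, and the `center` attribute and the helper name `goal_center` are assumptions made for illustration only.

```python
from types import SimpleNamespace


def goal_center(goal_state):
    """Return the goal position's center as a tuple, or None if it is unavailable."""
    # Some goals may define only a time interval, so check before accessing.
    if not hasattr(goal_state, "position"):
        return None
    position = goal_state.position
    # Assumption: the position is a shape object exposing a `center` attribute.
    if not hasattr(position, "center"):
        return None
    return tuple(position.center)


# Stand-in goal states (hypothetical, not CommonRoad classes).
goal_with_position = SimpleNamespace(position=SimpleNamespace(center=(10.0, 2.5)))
goal_time_only = SimpleNamespace(time_step=SimpleNamespace(start=40, end=50))

print(goal_center(goal_with_position))
print(goal_center(goal_time_only))
```

With a check like this, the planner can fall back to another strategy when the function returns None instead of raising an AttributeError.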

Thanks for the reply. I downloaded the VM image and work in it. I guess the version of CommonRoad in it is different from the one currently online. How do I update it easily without breaking the installation?

You could try to execute git pull in the commonroad-search directory to pull the newest files.

Hi, I tried git pull and got this error and it aborted.

error: Your local changes to the following files would be overwritten by merge:

notebooks/tutorials/.ipynb_checkpoints/tutorial_commonroad-search-checkpoint.ipynb

notebooks/tutorials/tutorial_commonroad-search.ipynb

Please commit your changes or stash them before you merge.

error: The following untracked working tree files would be overwritten by merge:

GSMP/motion_automata/automata/MotionPlanner_gbfs_only_time.py

pdfs/0_Guide_for_Exercise.pdf

pdfs/1.Brief_Introduction_to_CommonRoad_io.pdf

scenarios/exercise/CHN_Sha-10_2_T-1.xml

scenarios/exercise/CHN_Sha-11_3_T-1.xml

scenarios/exercise/CHN_Sha-11_4_T-1.xml

scenarios/exercise/CHN_Sha-12_2_T-1.xml

scenarios/exercise/CHN_Sha-13_2_T-1.xml

scenarios/exercise/CHN_Sha-14_2_T-1.xml

scenarios/exercise/CHN_Sha-15_3_T-1.xml

scenarios/exercise/CHN_Sha-15_4_T-1.xml

scenarios/exercise/CHN_Sha-16_2_T-1.xml

scenarios/exercise/CHN_Sha-17_2_T-1.xml

scenarios/exercise/CHN_Sha-1_5_T-1.xml

scenarios/exercise/CHN_Sha-1_6_T-1.xml

… (A few more scenarios)

Aborting

So I tried git add * followed by git stash, as suggested in a Stack Overflow answer, but that did not work either. Kindly suggest something.

Hi, it worked like this:

git reset --hard HEAD

git pull

I do not know if this was the right way or not but it works.

This is the right way. With git reset --hard you discarded all your local changes, which made git pull possible in your case. Please let me know whether you can work smoothly with the newer version of batch processing. Thanks!

Yes, it worked. When I run the new batch processing script, it runs on all scenarios. I just wanted to ask: it found 30 solutions out of 300 with A* and gbfs using the default config (SM1 cost function, I think), so I do not know how to reach the default 102 solutions!

That was computed with a 120-second timeout. Also, the survival scenarios were solved using the new planner (gbfs_only_time). I could solve around 40 with regular gbfs and around 60 with gbfs_only_time, which added up to 102 for me. (It is also possible to combine the regular gbfs and the only-time variant into one planner by inspecting the type of the goal state and calculating the heuristic accordingly.) The cost functions (SM1, etc.) do not affect the search for a solution; they are only used to evaluate the performance in the benchmark.
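The idea of combining both planners by inspecting the goal state could be sketched roughly as follows. This is not the code of gbfs or gbfs_only_time; the function name `heuristic`, the stand-in goal objects, and the attributes `position.center` and `time_step.start` are assumptions for illustration.

```python
import math
from types import SimpleNamespace


def heuristic(current_pos, current_time_step, goal_state):
    """Pick a heuristic based on which attributes the goal state provides."""
    if hasattr(goal_state, "position"):
        # Goal has a position: use the straight-line distance to its center.
        gx, gy = goal_state.position.center
        return math.hypot(gx - current_pos[0], gy - current_pos[1])
    if hasattr(goal_state, "time_step"):
        # Time-only goal: use the time steps left until the goal interval starts.
        return max(goal_state.time_step.start - current_time_step, 0)
    # No usable attribute: fall back to an uninformed heuristic.
    return 0.0


# Stand-in goal states (hypothetical, not CommonRoad classes).
position_goal = SimpleNamespace(position=SimpleNamespace(center=(3.0, 4.0)))
time_only_goal = SimpleNamespace(time_step=SimpleNamespace(start=40, end=50))

print(heuristic((0.0, 0.0), 0, position_goal))
print(heuristic((0.0, 0.0), 10, time_only_goal))
```

Note that the two branches return values in different units (distance versus time steps). That is acceptable here because a single planning problem uses only one branch, but the values should not be compared across scenarios.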

Thanks for the reply. I downloaded the 27/11 VM but am facing this error: when I run any ipynb, the memory just fills up, and there seems to be no option to increase the memory allocated to the virtual machine. Kindly help!!

Hi, you should be able to adjust the memory in the settings panel of VirtualBox, under ‘System’.

You could also try to change the value for max_tree_depth in batch_processing_config.yaml.

Well, I tried, but it did not work! Maybe I will figure something out!!

Also, just one more doubt: when we run tutorial_commonroad-search.ipynb for one scenario and find a solution, irrespective of the cost, we can count that as one of those 110 scenarios, right?

Yes, that’s acceptable.

Thanks a lot! One more off-topic thing: is there a way we could use a faster desktop in some lab or elsewhere, as my laptop hangs most of the time after running a few scenarios? I tried Linux and Windows, using both Docker and the VM. Thanks

Or use the function find_all_colliding_objects to drastically improve collision checking performance. Python loops are slow.

Vitali, thank you for the hint. Where can we find this function?