Hi

I have installed CommonRoad-all using `pip install commonroad-all==0.0.2` and commonroad_rl using `pip install commonroad-rl==2023.1.3`. My Python version is 3.7.13 and my gym version is 0.21.0, but I get the error below. I looked at a similar issue, but as far as I can tell everything is up to date as described there. Link to the issue: Minimal example, commonroad_rl/gym_commonroad/configs.yaml not found
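
To double-check those versions from inside the same environment, a small script like the one below can print them. It is only a sketch: `installed_versions` is my own helper name, and it assumes the distribution names match the ones used in the `pip install` commands. On Python 3.7 it needs the `importlib_metadata` backport, because `importlib.metadata` only exists from Python 3.8.

```python
import sys

try:  # Python 3.8+ ships importlib.metadata in the standard library
    from importlib.metadata import PackageNotFoundError, version
except ImportError:  # Python 3.7: requires `pip install importlib_metadata`
    from importlib_metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    print("python", sys.version.split()[0])
    for name, ver in installed_versions(["commonroad-all", "commonroad-rl", "gym"]).items():
        print(name, ver or "not installed")
```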

I am trying to run the gym environment with the basic example provided, using the following code:

```python
import gym

import commonroad_rl.gym_commonroad  # noqa: F401 (importing registers "commonroad-v1" with gym)

# kwargs overwrite configs defined in commonroad_rl/gym_commonroad/configs.yaml
env = gym.make("commonroad-v1",
               action_configs={"action_type": "continuous"},
               goal_configs={"observe_distance_goal_long": True,
                             "observe_distance_goal_lat": True},
               surrounding_configs={"observe_lidar_circle_surrounding": True,
                                    "lidar_circle_num_beams": 20},
               reward_type="sparse_reward",
               reward_configs_sparse={"reward_goal_reached": 50.,
                                      "reward_collision": -100.})

observation = env.reset()
for _ in range(500):
    # env.render()  # rendered images will be saved under ./img
    action = env.action_space.sample()  # your agent here (this takes random actions)
    observation, reward, done, info = env.step(action)

    if done:
        observation = env.reset()

env.close()
```

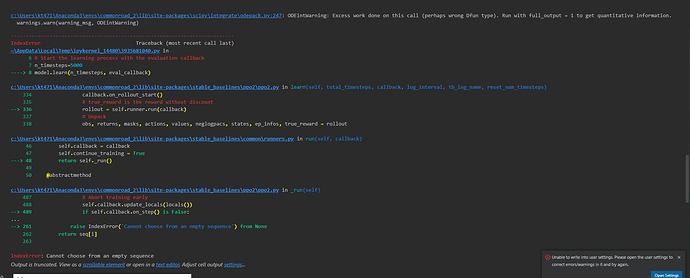

The error I get:

```
---------------------------------------------------------------------------
AssertionError                            Traceback (most recent call last)
~\AppData\Local\Temp\ipykernel_26200\2157516103.py in <module>
      8     surrounding_configs={"observe_lane_circ_surrounding": True, "lane_circ_sensor_range_radius": 100.},
      9     reward_type="sparse_reward",
---> 10     reward_configs_sparse={"reward_goal_reached": 50., "reward_collision": -100})
     11 
     12 observation = env.reset()

c:\Users\Anaconda3\envs\commonroad_2\lib\site-packages\gym\envs\registration.py in make(id, **kwargs)
    233 
    234 def make(id, **kwargs):
--> 235     return registry.make(id, **kwargs)
    236 
    237 

c:\Users\Anaconda3\envs\commonroad_2\lib\site-packages\gym\envs\registration.py in make(self, path, **kwargs)
    127             logger.info("Making new env: %s", path)
    128         spec = self.spec(path)
--> 129         env = spec.make(**kwargs)
    130         return env
    131 

c:\Users\Anaconda3\envs\commonroad_2\lib\site-packages\gym\envs\registration.py in make(self, **kwargs)
     88         else:
     89             cls = load(self.entry_point)
---> 90             env = cls(**_kwargs)
     91 
     92         # Make the environment aware of which spec it came from.

c:\Users\Anaconda3\envs\commonroad_2\lib\site-packages\commonroad_rl\gym_commonroad\commonroad_env.py in __init__(self, meta_scenario_path, train_reset_config_path, test_reset_config_path, visualization_path, logging_path, test_env, play, config_file, logging_mode, **kwargs)
     96         if kwargs is not None:
     97             for k, v in kwargs.items():
---> 98                 assert k in self.configs, f"Configuration item not supported: {k}"
     99                 # TODO: update only one term in configs
    100                 if isinstance(v, dict):

AssertionError: Configuration item not supported: reward_configs_sparse
```
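
From the traceback, `commonroad_env.py` rejects every keyword argument that is not already a key of `self.configs`. The sketch below mirrors that check outside the environment so the offending keys can be listed all at once. The helper names are my own (not part of commonroad_rl), and `example_configs` is a hypothetical stand-in: I only assumed that the keys passed before `reward_configs_sparse` were accepted, since the assertion fired on that one.

```python
from typing import Any, Dict, List


def unsupported_config_keys(configs: Dict[str, Any], **kwargs: Any) -> List[str]:
    """Return the keyword arguments that would trip the assertion.

    Mirrors the check at line 98 of commonroad_env.py in the traceback:
    every keyword passed through gym.make must already be a key of configs.
    """
    return [k for k in kwargs if k not in configs]


def keys_with_same_prefix(configs: Dict[str, Any], key: str) -> List[str]:
    """List supported keys sharing the first word of `key`, e.g. 'reward'."""
    prefix = key.split("_")[0]
    return sorted(k for k in configs if k.startswith(prefix))


# Hypothetical stand-in for the environment's configs; the real dictionary
# comes from commonroad_rl/gym_commonroad/configs.yaml and may differ.
example_configs = {
    "action_configs": {},
    "goal_configs": {},
    "surrounding_configs": {},
    "reward_type": "sparse_reward",
}

rejected = unsupported_config_keys(
    example_configs,
    action_configs={"action_type": "continuous"},
    reward_type="sparse_reward",
    reward_configs_sparse={"reward_goal_reached": 50.0, "reward_collision": -100.0},
)
print(rejected)  # ['reward_configs_sparse']
print(keys_with_same_prefix(example_configs, "reward_configs_sparse"))  # ['reward_type']
```

Running the same helpers against the installed package's actual configuration (for instance after loading `configs.yaml` with PyYAML; I have not checked how that file is nested) should show which reward-related key this version expects in place of `reward_configs_sparse`.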