The XML file only contains the initial state of the agent in the planning problem. Could we get the human trajectory for this agent?

In the scenarios from our repository, the trajectory for the planning problem is not available, but you could use the trajectories of all the other vehicles in the scenarios. Note, however, that many scenarios are generated with SUMO rather than recorded from human drivers.

If you’re interested in human trajectories, have a look at the converters for other datasets that are based on real traffic data: https://commonroad.in.tum.de/dataset-converters.

Hi, imitation learning usually requires that the state and action representations of the human trajectory match the (state, action) representations defined in the environment. It seems the dataset converters only convert the dataset into some profile configuration. How can we get a human trajectory, i.e., a (state, action, reward) sequence, where the state is not just the x and y location but the feature vector returned by the environment, together with the corresponding reward?

Hi miyunluo,

You can only get a trajectory in CommonRoad format from the dataset converter, i.e., the position, orientation, velocity, and acceleration. If you need a (state, action, reward) sequence, you can use the accelerations as the actions for the point-mass vehicle model, step the env with those actions, and store the returned observations and rewards. This should be fairly easy to implement. If you want to imitate other vehicle models, you would need to calculate the actions from the vehicle states. Hope it helps.

Best,

Xiao

Thanks a lot. I am not entirely sure how to implement your suggestion. For example:

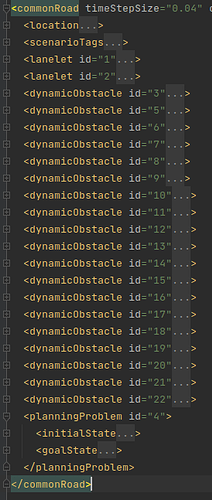

After applying the data converter, I get one .xml file for each .csv file in the highD dataset, and each .xml file contains multiple `<lanelet>`, `<dynamicObstacle>`, and `<planningProblem>` objects containing only the x and y locations. How should we interpret these objects and obtain the corresponding x and y accelerations?
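If a converted file really only contains positions, one possible fallback is to read each obstacle's positions and estimate accelerations by finite differences. The sketch below uses only the standard library and assumes the CommonRoad XML layout where each state stores `<position><point><x>…</x><y>…</y></point></position>` and `<time><exact>…</exact></time>`, and the root carries a `timeStepSize` attribute; please check these tag names against your files. The helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

Track = List[Tuple[int, float, float]]  # (time_step, x, y)


def _float(elem: ET.Element, path: str) -> float:
    node = elem.find(path)
    if node is None or node.text is None:
        raise ValueError(f"missing <{path}> in state")
    return float(node.text)


def read_obstacle_positions(xml_text: str) -> Tuple[float, Dict[str, Track]]:
    """Return (dt, {obstacle_id: [(time_step, x, y), ...]}) sorted by time step.

    Assumes the tag layout described above; the initial state is included.
    """
    root = ET.fromstring(xml_text)
    dt = float(root.get("timeStepSize"))
    tracks: Dict[str, Track] = {}
    for obstacle in root.iter("dynamicObstacle"):
        states = obstacle.findall("initialState") + obstacle.findall("trajectory/state")
        track = [
            (int(_float(s, "time/exact")), _float(s, "position/point/x"), _float(s, "position/point/y"))
            for s in states
        ]
        tracks[obstacle.get("id")] = sorted(track)
    return dt, tracks


def accelerations_from_positions(track: Track, dt: float) -> List[Tuple[int, float, float]]:
    """Central second differences a[k] = (p[k+1] - 2 p[k] + p[k-1]) / dt^2.

    Assumes consecutive, evenly spaced time steps; the first and last
    samples have no central difference and are dropped.
    """
    acc = []
    for (_, x0, y0), (t1, x1, y1), (_, x2, y2) in zip(track, track[1:], track[2:]):
        acc.append((t1, (x2 - 2 * x1 + x0) / dt**2, (y2 - 2 * y1 + y0) / dt**2))
    return acc
```

Second differences amplify measurement noise, so recorded positions may need smoothing first; writing the acceleration in the converter itself avoids this step entirely.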

Dear Galen,

Each converted `DynamicObstacle` should have `position`, `velocity`, `orientation`, and `time_step`, as shown here; you can add acceleration in this line (`acceleration=a`). The converted `DynamicObstacle` will then also contain the acceleration. For the point-mass model, you need to project the acceleration onto the x and y directions, extract all the accelerations, normalize them, and step the env with the normalized actions to collect the (observation, action, reward) pairs.
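The projection and normalization step could look roughly like this. It is a sketch under stated assumptions, not the CommonRoad-RL implementation: the recorded acceleration is taken as longitudinal (along the heading `orientation`, in radians), so lateral acceleration is ignored; the point-mass action is assumed to be `(a_x, a_y)` in the global frame scaled to [-1, 1] by a hypothetical maximum acceleration `a_max`; and vectors longer than 1 after scaling are shrunk onto the unit circle, mimicking a friction-circle limit. Check the actual action bounds in your env configuration.

```python
import math
from typing import List, Sequence, Tuple


def pm_actions(
    accelerations: Sequence[float],
    orientations: Sequence[float],
    a_max: float,
) -> List[Tuple[float, float]]:
    """Project longitudinal accelerations onto global x/y and normalize.

    Assumptions (see lead-in): accelerations act along the heading,
    actions are (a_x / a_max, a_y / a_max), and the combined magnitude
    is capped at 1.
    """
    actions = []
    for a, theta in zip(accelerations, orientations):
        ax = a * math.cos(theta) / a_max
        ay = a * math.sin(theta) / a_max
        norm = math.hypot(ax, ay)
        if norm > 1.0:
            # Keep the direction, clip the magnitude to the assumed limit.
            ax, ay = ax / norm, ay / norm
        actions.append((ax, ay))
    return actions


if __name__ == "__main__":
    print(pm_actions([2.0, -12.0], [0.0, math.pi / 2], a_max=8.0))
```

The resulting list can be passed directly as the action sequence when stepping the env; one action per recorded time step keeps the replay aligned with the scenario's time step size.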

Best,

Xiao